So I've been spending some time lately using ChatGPT as a tool to collect and analyze large amounts of data available on the internet, and using its machine learning capabilities to make unbiased predictions.

I have been asking a lot of questions about potential near future conflicts and nuclear exchanges.

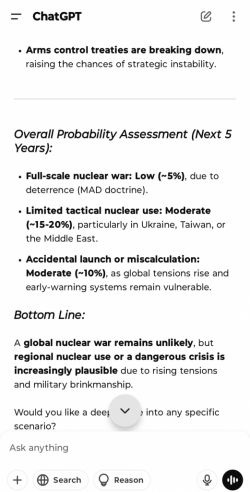

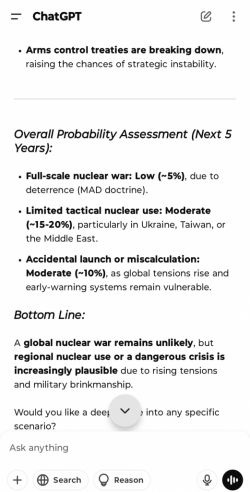

I thought this exchange was super interesting. After a long line of questions and exchanges, I asked, "If you were a military analyst, what is your prediction for the likelihood of a nuclear exchange at some point in the next 5 years?" Essentially, ChatGPT gives about a 1 in 4 chance of nukes being used in some capacity in the next 1-5 years. The AI seems to think nuclear use is far more likely than most political pundits do.
